Simulating Data with the Splunk Event Generator

While installing a new app on your Splunk search head is usually a fairly benign action, introducing a technology add-on (TA) on your forwarders and indexers often requires more attention, especially if your production environment is guarded by change control. The problem is that without the data generated by those inputs, your newly installed app may not display properly, and without seeing its dashboards populated with data, you may not be able to judge how useful it really is. I suppose deploying a fully mirrored “dev” environment for testing could solve this proverbial “chicken and egg” problem, but for those without that sort of budget or license, an alternative is the Splunk Event Generator.

The Splunk Event Generator is loaded like any other Splunk app, and lets you load sample logs or an exported CSV file as event templates. The app uses these templates to create mock events with simulated timestamps. You can also modify field values and configure random variance for specified fields, all while preserving event structure. A template can even be “looped” to provide a constant stream of real-time data.

A copy of the eventgen can be found at https://github.com/splunk/eventgen. Once the app is installed into your etc/apps/ directory, give this example a try to get started:

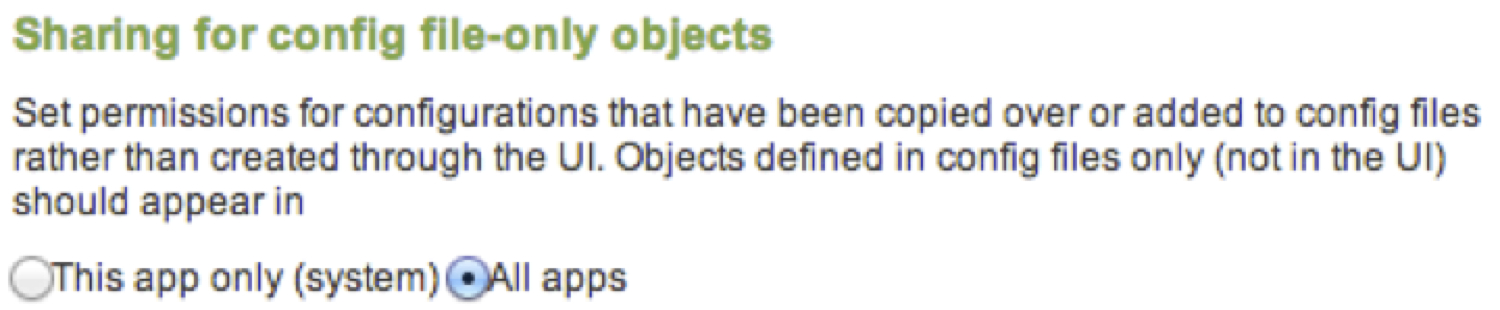

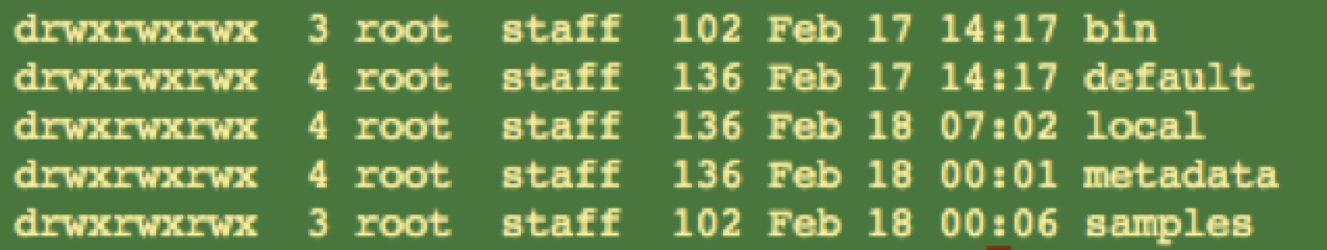

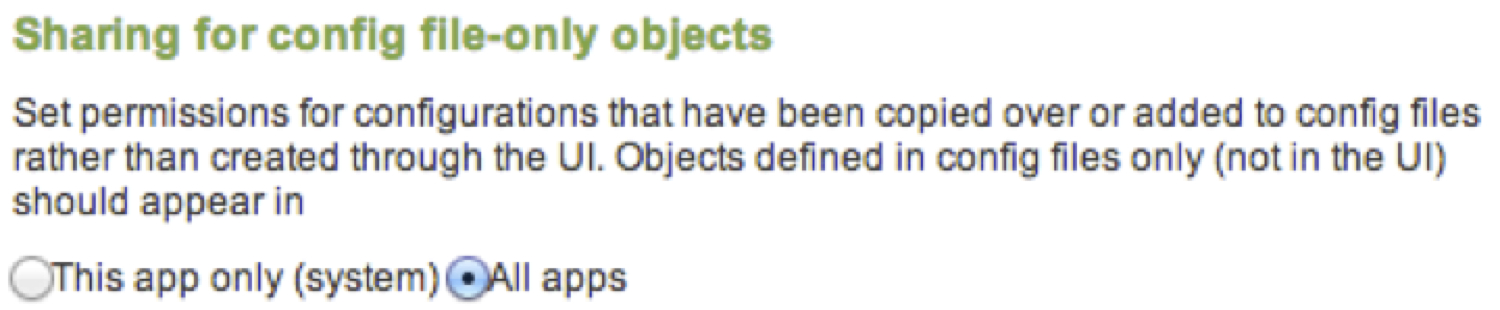

1. Create a barebones app to hold your configs. Be sure that the app’s config files are visible to all apps. Something like below should look familiar:

2. At the same level as the default and local directories, create a directory called samples. This is where you will keep all of your log templates.

3. For this example, we will create a simple file in the samples/ directory called sampleApacheLog with a single event:

[Wed Oct 11 14:32:52 2000] error [client 127.0.0.1] client denied by server configuration: /export/home/live/ap/htdocs/test

4. In the local directory of your config app, you can now reference sampleApacheLog in an eventgen.conf file. The file uses ordinary configuration stanzas, in which you define the tokens the event generator should search for and replace. For examples of all available options, see the README directory of the eventgen-master app. Regular expressions are used to match the fields of interest, and in the case of timestamps, a working knowledge of strptime format codes is also needed. For our example, paste the stanza below into your eventgen.conf file and restart Splunk; the generator will begin producing the error event above with current timestamps:

    [sampleApacheLog]
    mode = sample
    sampletype = raw
    index = main
    sourcetype = apache
    outputMode = splunkstream
    splunkHost = localhost
    splunkUser = admin
    splunkPass = changeme
    token.0.token = \w+\s\w+\s\d{1,2}\s\d{1,2}:\d{2}:\d{2}\s\d{4}
    token.0.replacementType = timestamp
    token.0.replacement = %a %b %d %H:%M:%S %Y

(Please note that you may have to change the login credentials to match your own Splunk instance.)
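If you would rather script the four steps above than create the files by hand, something like the following Python sketch could do it. It is only an illustration: the app name `eventgen_configs`, the function names, and the use of `metadata/default.meta` with `export = system` to make the configs visible to all apps are my assumptions, not something the eventgen itself requires. The sample event and the stanza contents are the ones from this post.

```python
from pathlib import Path

# The single sample event from step 3.
SAMPLE_EVENT = (
    "[Wed Oct 11 14:32:52 2000] error [client 127.0.0.1] "
    "client denied by server configuration: /export/home/live/ap/htdocs/test\n"
)

# Assumption: exporting the app's objects to "system" scope is how the
# configs are made visible to all apps (step 1).
GLOBAL_EXPORT_META = "[]\nexport = system\n"


def build_eventgen_conf(splunk_user="admin", splunk_pass="changeme"):
    """Return the eventgen.conf stanza from step 4 as a string."""
    lines = [
        "[sampleApacheLog]",
        "mode = sample",
        "sampletype = raw",
        "index = main",
        "sourcetype = apache",
        "outputMode = splunkstream",
        "splunkHost = localhost",
        f"splunkUser = {splunk_user}",
        f"splunkPass = {splunk_pass}",
        r"token.0.token = \w+\s\w+\s\d{1,2}\s\d{1,2}:\d{2}:\d{2}\s\d{4}",
        "token.0.replacementType = timestamp",
        "token.0.replacement = %a %b %d %H:%M:%S %Y",
    ]
    return "\n".join(lines) + "\n"


def scaffold_app(apps_dir, app_name="eventgen_configs"):
    """Create the bare-bones config app under apps_dir and return its path.

    app_name is a hypothetical name chosen for this sketch.
    """
    app = Path(apps_dir) / app_name
    for sub in ("default", "local", "samples", "metadata"):
        (app / sub).mkdir(parents=True, exist_ok=True)
    (app / "metadata" / "default.meta").write_text(GLOBAL_EXPORT_META)
    (app / "samples" / "sampleApacheLog").write_text(SAMPLE_EVENT)
    (app / "local" / "eventgen.conf").write_text(build_eventgen_conf())
    return app
```

Calling `scaffold_app("/opt/splunk/etc/apps")` (adjust the path to your own install) would lay down the directories and files; you would still need to restart Splunk afterwards.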

Once you have given this example a try, replace sampleApacheLog with a sample log template of your own. As long as your eventgen.conf has a stanza referencing your template, and the regular expression and strptime format in that stanza match your event timestamps, the event generator will produce simulated events. You can even use whole log files as templates if you wish.
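Before restarting Splunk with a new template, it can save some time to check that your token regex and time format really do match your events. The sketch below uses only Python's standard `re` and `datetime` modules; the function names are hypothetical, and the replacement step only imitates what a timestamp token does conceptually, not the eventgen's actual code.

```python
import re
from datetime import datetime

SAMPLE_EVENT = (
    "[Wed Oct 11 14:32:52 2000] error [client 127.0.0.1] "
    "client denied by server configuration: /export/home/live/ap/htdocs/test"
)
TOKEN_REGEX = r"\w+\s\w+\s\d{1,2}\s\d{1,2}:\d{2}:\d{2}\s\d{4}"
TIME_FORMAT = "%a %b %d %H:%M:%S %Y"


def check_timestamp_token(event, pattern, time_format):
    """Return the parsed timestamp, or raise ValueError if regex or format fails."""
    match = re.search(pattern, event)
    if match is None:
        raise ValueError("token regex did not match the event")
    # strptime raises ValueError when the matched text does not fit the format.
    return datetime.strptime(match.group(0), time_format)


def replace_timestamp(event, pattern, time_format, now):
    """Swap the first matched timestamp for `now`, rendered in the same format."""
    # A function is used as the replacement so the new text is inserted literally.
    return re.sub(pattern, lambda m: now.strftime(time_format), event, count=1)


if __name__ == "__main__":
    print(check_timestamp_token(SAMPLE_EVENT, TOKEN_REGEX, TIME_FORMAT))
    print(replace_timestamp(SAMPLE_EVENT, TOKEN_REGEX, TIME_FORMAT, datetime.now()))
```

If `check_timestamp_token` raises an error for one of your own events, the regex or the format string in your stanza most likely needs adjusting.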

Adding this tool to your Splunk app collection lets you test apps without ever modifying the forwarders in your production environment. You may also find the “eventgen” valuable when developing your own custom dashboards, since it supplies a steady stream of data without intruding upon live systems. Below is the video clip that turned me on to this app; it demonstrates how to use CSV files with the event generator. Perhaps you will find it as useful as I did.

Title image courtesy of dream designs / FreeDigitalPhotos.net
